Luke Darlow

I am an Artificial Intelligence Research Scientist at Sakana AI in Tokyo, where I am focused on building the future of artificial intelligence by creating biologically-inspired models that leverage and emulate core aspects of true intelligence. Originally from South Africa, I have spent most of my life learning and researching. My current research has resulted in a new kind of neural network, called the Continuous Thought Machine. My doctoral research focused on representation learning in complex unsupervised settings where neural networks often fail to generalize.

Featured Research

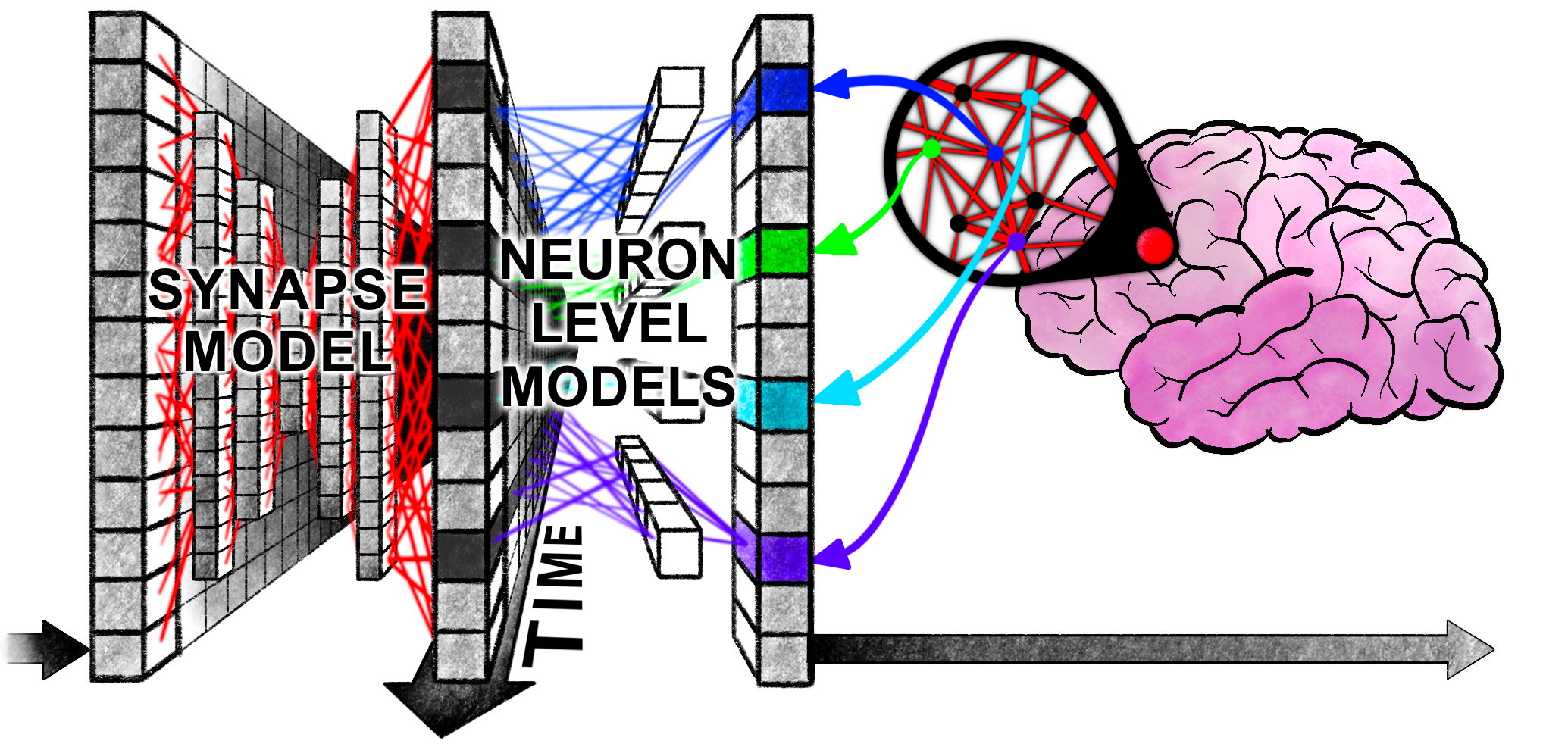

Continuous Thought Machines

The Continuous Thought Machine (CTM) is a novel neural network architecture designed to explicitly incorporate neural timing, the resulting dynamics, and overall neural synchronization as foundational modelling elements. By moving beyond static neuron abstractions while remaining within the realm of tractable deep learning implementations, the CTM can perform tasks requiring complex sequential reasoning and adaptive computation, where it can "think" longer for more challenging problems. This architecture is built on two core innovations:

- Neuron-Level Temporal Processing: Each neuron uses its own unique weight parameters to process a history of incoming signals, allowing for the emergence of complex, dynamic neural activity.

- Neural Synchronization as a Latent Representation: The model uses the synchronization of neural activity over time as the direct representation for observing the world and producing outputs, a biologically-inspired design choice that enables a new depth of computational capacity.

This work represents a significant step toward more biologically plausible and powerful AI systems.
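The two ideas above can be illustrated with a toy NumPy sketch. It is not the CTM implementation: the function name `ctm_sketch`, the random `mixing` matrix that produces incoming signals, the single linear map per neuron, the `tanh` nonlinearity, and all sizes are assumptions chosen only to keep the example small.

```python
import numpy as np


def ctm_sketch(num_neurons=8, history_len=4, num_ticks=16, seed=0):
    """Toy illustration of per-neuron temporal processing and synchronization.

    All names, sizes, and modelling choices here are hypothetical.
    """
    rng = np.random.default_rng(seed)

    # Idea 1: every neuron owns a private weight vector over its own
    # history of incoming signals (one row per neuron).
    neuron_weights = rng.normal(size=(num_neurons, history_len))

    # Assumed stand-in for whatever produces the incoming signals:
    # a fixed random recurrent mixing of the previous activations.
    mixing = rng.normal(size=(num_neurons, num_neurons)) / np.sqrt(num_neurons)

    history = np.zeros((num_neurons, history_len))  # rolling signal history
    activity = rng.normal(size=num_neurons)         # initial activations
    trace = []

    for _ in range(num_ticks):  # internal "thinking" steps
        incoming = mixing @ activity
        # Drop the oldest signal and append the newest for each neuron.
        history = np.concatenate([history[:, 1:], incoming[:, None]], axis=1)
        # Row-wise dot product: neuron n applies only its own weights
        # to only its own history.
        activity = np.tanh(np.einsum("nh,nh->n", neuron_weights, history))
        trace.append(activity)

    trace = np.stack(trace, axis=1)  # shape: (num_neurons, num_ticks)

    # Idea 2: synchronization as the representation. Entry (i, j) is the
    # inner product over time of the activity of neurons i and j.
    sync = trace @ trace.T
    return trace, sync


trace, sync = ctm_sketch()
print(trace.shape, sync.shape)
```

In this sketch, `sync` (rather than the activations at any single step) is what a downstream readout would consume, and running more ticks gives the synchronization matrix more history to draw on.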

Other Research Themes

Foundational Models for Time Series Forecasting

During my time at the Systems Infrastructure Lab of Huawei, I designed and developed a foundational forecasting model, DAM, which was the first of its kind to be presented at a high-level conference (ICLR 2024). I supervised interns as they researched complex topics, including a study of common oversights regarding linear forecasting models, which was presented at ICML 2024. I also created FoldFormer, a highly efficient transformer model currently deployed in Huawei's cloud infrastructure for forecasting resource demand.

PhD Research

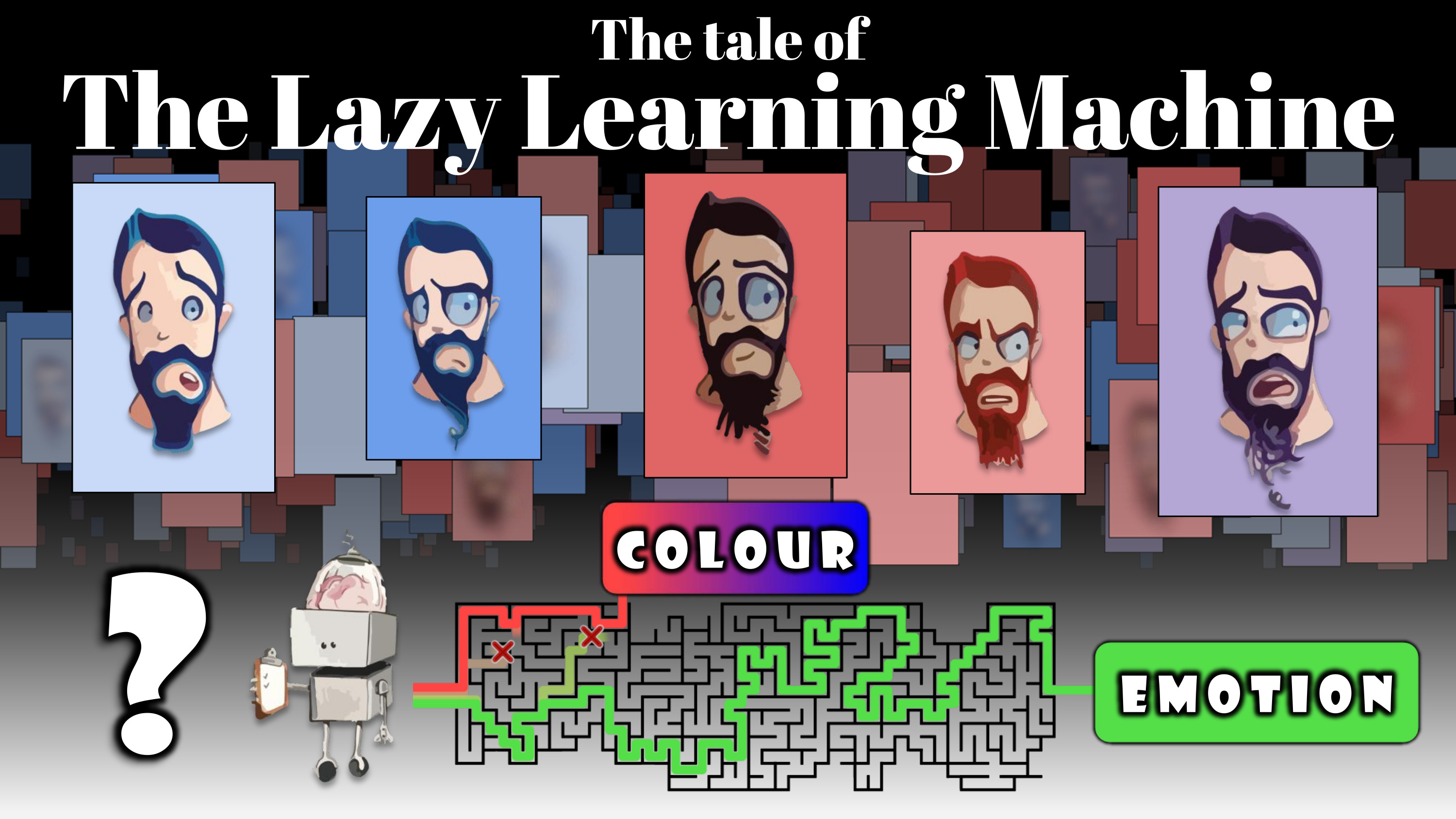

My PhD thesis at the University of Edinburgh, titled "Learning reliable representations when proxy objectives fail," investigated why deep neural networks often learn unreliable or non-robust solutions. This happens when a model trained on a substitute (proxy) task learns 'shortcuts' based on easy-to-compute features (e.g., background color) instead of the complex features (e.g., object shape) needed for true generalization. I distilled this core problem into a talk, 'The Tale of the Lazy Learning Machine,' for the University of Edinburgh's Three Minute Thesis (3MT) competition, where I competed at the university-wide level.

My thesis introduced three novel methods to mitigate this problem:

- Deep Decision Tree Layer (DDTL): A method for semantic hashing that prevents over-compression by composing hash codes from both supervised (class-based) and unsupervised (contrastive) parts, ensuring an efficient and well-distributed use of the available hash space.

- Deep Hierarchical Object Grouping (DHOG): An approach that improves deep clustering by forcing a network to find a diverse hierarchy of solutions, preventing it from settling on a single, simple grouping based on trivial features.

- Latent Adversarial Debiasing (LAD): A technique that removes spurious correlations from training data. It uses a VQ-VAE to access the data manifold and performs an adversarial walk in the latent space to generate new, de-biased training images.

During my PhD, I also developed GINN (Geometric Illustration of Neural Networks), an interactive tool to build intuition for how neural networks function.

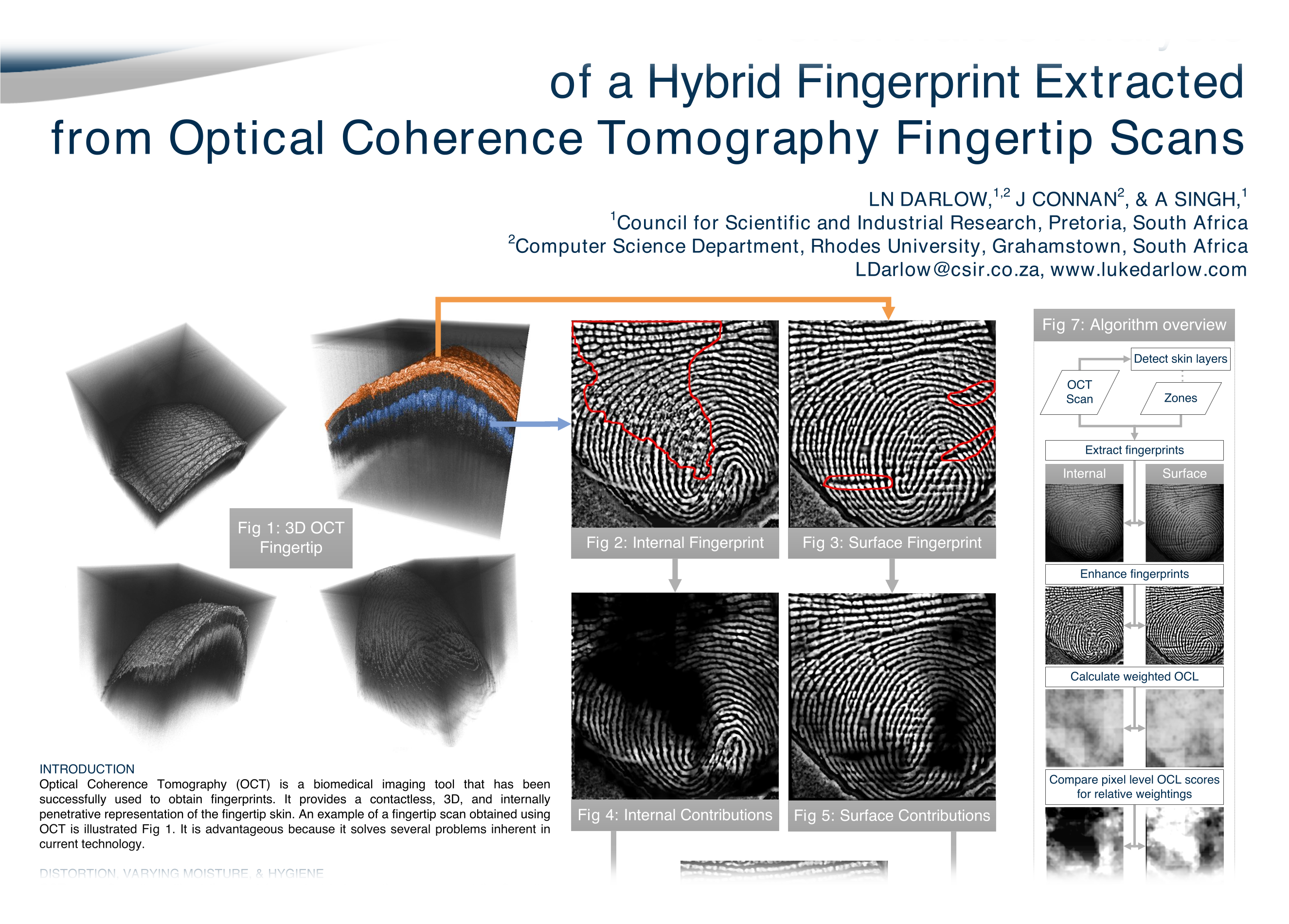

Biometrics & Subsurface Fingerprint Analysis

My early research at CSIR in South Africa involved pioneering work in biometrics. I designed computer vision models to extract features from fingerprints and developed novel algorithms to extract usable subsurface fingerprints from 3D Optical Coherence Tomography (OCT) scans in real time. This work led to 10 publications in the span of two years, tangible physical and digital outputs that were presented to high-level dignitaries including the minister of science and technology of South Africa, and several awards, including a 'best poster award' (shown below) at the top biometrics conference, ICB, in 2016.

A complete list of my publications can be found on my Google Scholar profile.

Contact

I am always open to discussing new ideas, collaborations, or interesting opportunities. Please feel free to reach out on X or LinkedIn.